Vegetation Masks (VM) can be a convenient way to allocate different vegetation types to an area. However, they don’t really play nicely with other Vegetation Studio features such as Vegetation Mask Lines (ML). The problem is that whatever vegetation you include in a VM will always override any attempts to use an ML to exclude vegetation. What does that all really mean? If you add a road to a scene then naturally you don’t want a huge tree in the middle of it. So you assign the road an ML and exclude all trees, simple. But if the trees are there because the road overlaps a VM then you’ll still have a forest in the middle of your road.

The answer to this is to use Biome Masks (BM). A BM fulfills a similar role: you can assign it different vegetation (along with lots of other options). An ML will correctly remove any vegetation that comes from a BM, and therefore you can have tree-free roads.

“Oh no, but I have created lots of VMs”

You may have a scene that you’ve painstakingly created using VMs, or perhaps you’ve used the Real World Terrain asset which generates VMs from the underlying texture (nice). If you have, then you can use the following script to convert VMs to BMs.

Instructions:

- Create your Biome in Vegetation Studio (Pro), remember the Biome type

- Create an empty game object in your scene for every type of Vegetation Mask; e.g. Grasses, Trees. This will be the Biome’s parent object

- Assign the script to the parent game object containing your VMs

- Select the Biome type from (1)

- Enter the “Starts With Mask” to find all the VMs that start with that name. So if you have VMs called “Grass 12344”, “Grass 54323”, etc, you would enter “Grass”

- Assign the associated empty game object from (2), i.e. drag that node into “Biome Parent”

- Repeat 3-6 for each type of conversation you want. E.g. another one with a “Starts With Mask” of “Tree”

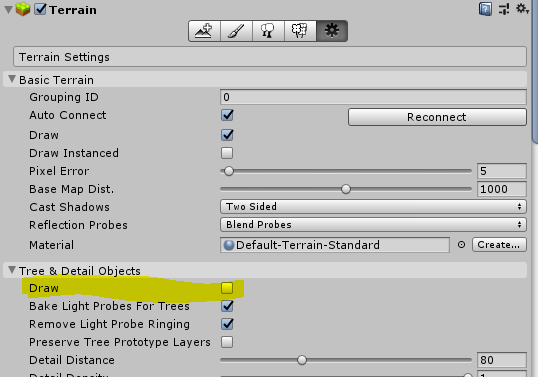

- To run a conversion select the “Should Run” checkbox

Once run, you should find the converted Biome Masks under the appropriate parent objects. The original Vegetation Masks are still there but have been disabled.
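To make the “Starts With Mask” matching concrete, here is a small stand-alone sketch of the same prefix test the script applies to each mask name. `MaskNameFilter` and `Matching` are hypothetical names used only for illustration, and the mask names are made up; no Unity or Vegetation Studio types are involved.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: mirrors the name check used by the conversion script.
public static class MaskNameFilter
{
    // Returns the names that begin with the given prefix (case-sensitive).
    public static List<string> Matching(IEnumerable<string> names, string prefix)
    {
        return names.Where(n => n.StartsWith(prefix)).ToList();
    }
}
```

With the names “Grass 12344”, “Grass 54323”, “Tree 00001”, “Grassland 7” and “grass 9”, a prefix of “Grass” selects the first two and also “Grassland 7”, but not “grass 9”. If your mask names overlap like that, a more specific prefix may be safer. An empty prefix matches every name.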

```csharp
using System.Collections;
using System.Collections.Generic;
using AwesomeTechnologies; // assumed namespace of VegetationMaskArea
using AwesomeTechnologies.VegetationSystem;
using AwesomeTechnologies.VegetationSystem.Biomes;
using UnityEngine;

namespace CBC
{
    // Converts the Vegetation Masks below this object into Biome Masks, in the editor.
    [ExecuteInEditMode]
    public class VegToBiomeMask : MonoBehaviour
    {
        [SerializeField]
        Transform biomeParent;

        [SerializeField]
        BiomeType biomeType;

        [SerializeField]
        string startWithMask;

        [SerializeField]
        bool shouldRun = false;

        void Update()
        {
            if (shouldRun)
            {
                // Clear the flag straight away so ticking the checkbox runs one conversion.
                shouldRun = false;

                // Assumes the masks are VegetationMaskArea components.
                var childMasks = gameObject.GetComponentsInChildren<VegetationMaskArea>();
                foreach (var childMask in childMasks)
                {
                    if (childMask.enabled && childMask.name.StartsWith(startWithMask))
                    {
                        var nodes = childMask.Nodes;

                        // Create the new object under the Biome parent, at the mask's position.
                        var biomeMaskGameObject = new GameObject("B" + childMask.name);
                        biomeMaskGameObject.transform.SetParent(biomeParent, false);
                        biomeMaskGameObject.transform.position = childMask.transform.position;

                        // Assumes the Biome Mask component is BiomeMaskArea.
                        var biomeMaskArea = biomeMaskGameObject.AddComponent<BiomeMaskArea>();
                        biomeMaskArea.ClearNodes();
                        foreach (var node in nodes)
                        {
                            biomeMaskArea.AddNode(node.Position);
                        }

                        // For some strange reason you have to reassign the positions again,
                        // otherwise they all have an incorrect offset.
                        for (int x = 0; x < nodes.Count; x++)
                        {
                            var node = nodes[x];
                            var bNode = biomeMaskArea.Nodes[x];
                            bNode.Position = node.Position;
                        }

                        biomeMaskArea.BiomeType = biomeType;

                        // Keep the original Vegetation Mask, but disable it.
                        childMask.enabled = false;
                    }
                }
            }
        }
    }
}
```
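Because the original Vegetation Masks are only disabled, a conversion can be backed out. The following is a minimal sketch of one way to do that, not part of the original script: `RestoreVegMasks` is a hypothetical companion component, and it assumes the masks are `VegetationMaskArea` components in the `AwesomeTechnologies` namespace. It re-enables every disabled mask under the object whose name starts with the given prefix, whether or not the conversion was what disabled it.

```csharp
using AwesomeTechnologies; // assumed namespace of VegetationMaskArea
using UnityEngine;

namespace CBC
{
    // Hypothetical companion to VegToBiomeMask: turns disabled Vegetation Masks back on.
    public class RestoreVegMasks : MonoBehaviour
    {
        [SerializeField]
        string startWithMask = "";

        // Adds an entry to the component's context menu in the Inspector.
        [ContextMenu("Re-enable Vegetation Masks")]
        void Restore()
        {
            // Passing true also searches children whose game objects are inactive.
            foreach (var mask in GetComponentsInChildren<VegetationMaskArea>(true))
            {
                if (!mask.enabled && mask.name.StartsWith(startWithMask))
                {
                    mask.enabled = true;
                }
            }
        }
    }
}
```

Add it to the same parent object as your VMs, set the prefix, and choose “Re-enable Vegetation Masks” from the component's context menu. The generated Biome Masks are left alone, so delete those by hand if you no longer want them.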