One of the crucial areas of bot development is how to handle state, and Bot Framework v4 takes a new approach to it.

V3 State Handling

Probably the easiest way to access state data is through the context object, or more specifically the IBotData aspect of IDialogContext. This gives you the three main state bags: Conversation, Private Conversation and User.

```csharp
context.PrivateConversationData.SetValue("SomeBooleanState", true);
```
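To make the "property bag" idea concrete, here is a stand-alone sketch of a v3-style bag. `SimpleBotDataBag` is a hypothetical stand-in, not the SDK's `IBotDataBag`; it only models the string-keyed `SetValue`/`TryGetValue` style of access. The point is that the shape of the state exists only by convention, so a mistyped key or the wrong type still compiles and simply finds nothing.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a v3-style state bag (not the real IBotDataBag).
// Values live under free-form string keys, so the "shape" of the state only
// exists by convention in the calling code.
public class SimpleBotDataBag
{
    private readonly Dictionary<string, object> _values = new Dictionary<string, object>();

    public void SetValue<T>(string key, T value) => _values[key] = value;

    public bool TryGetValue<T>(string key, out T value)
    {
        // Succeeds only if the key exists AND the stored value has type T.
        if (_values.TryGetValue(key, out var raw) && raw is T typed)
        {
            value = typed;
            return true;
        }
        value = default(T);
        return false;
    }
}

public static class Program
{
    public static void Main()
    {
        var privateConversationData = new SimpleBotDataBag();
        privateConversationData.SetValue("SomeBooleanState", true);

        // A mistyped key still compiles; it just silently finds nothing.
        bool found = privateConversationData.TryGetValue("SomeBoolenState", out bool _);
        privateConversationData.TryGetValue("SomeBooleanState", out bool flag);

        Console.WriteLine($"{found} {flag}"); // False True
    }
}
```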

If you do not have access to the context, you can load the state directly from the store. The store implementation is pluggable; this example uses a Cosmos DB store:

```csharp
var stateStore = new CosmosDbBotDataStore(uri, key, storeTypes: types);

builder.Register(c => stateStore)
    .Keyed<IBotDataStore<BotData>>(AzureModule.Key_DataStore)
    .AsSelf()
    .SingleInstance();

IBotDataStore<BotData> botStateDataStore;

...

// Address(botId, channelId, userId, conversationId, serviceUrl):
// for an incoming activity, Recipient is the bot and From is the user.
var address = new Address(activity.Recipient.Id, activity.ChannelId, activity.From.Id, activity.Conversation.Id, activity.ServiceUrl);

var botState = await botStateDataStore.LoadAsync(address, BotStoreType.BotPrivateConversationData, CancellationToken.None);
```
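The address plus a `BotStoreType` is what lets the store find the right bag: user data is per user, conversation data is per conversation, and private conversation data is per user within a conversation. The sketch below models only that idea; `BotAddress`, `StoreScope` and the colon-separated key format are hypothetical, not what the SDK or the Cosmos DB store actually write.

```csharp
using System;

// Mirrors the three v3 scopes (illustrative names, not the SDK's BotStoreType).
public enum StoreScope { User, Conversation, PrivateConversation }

// Hypothetical, simplified address: the ids a store needs to locate a bag.
// BotId is carried along but unused by this simplified key format.
public record BotAddress(string BotId, string ChannelId, string UserId, string ConversationId);

public static class StoreKeys
{
    // Each scope is identified by a different subset of the address.
    // The key format itself is an assumption made for this sketch.
    public static string For(BotAddress a, StoreScope scope) => scope switch
    {
        StoreScope.User => $"{a.ChannelId}:{a.UserId}",
        StoreScope.Conversation => $"{a.ChannelId}:{a.ConversationId}",
        StoreScope.PrivateConversation => $"{a.ChannelId}:{a.ConversationId}:{a.UserId}",
        _ => throw new ArgumentOutOfRangeException(nameof(scope))
    };
}

public static class Program
{
    public static void Main()
    {
        // For an incoming message the user is activity.From, the bot is activity.Recipient.
        var address = new BotAddress(BotId: "bot-1", ChannelId: "webchat",
                                     UserId: "user-1", ConversationId: "conv-1");

        foreach (StoreScope scope in Enum.GetValues(typeof(StoreScope)))
            Console.WriteLine($"{scope}: {StoreKeys.For(address, scope)}");
    }
}
```

Swapping the bot and user ids would still produce a valid-looking key, just for the wrong "user", which is why the argument order matters.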

It’s a workable solution, but v4 takes a slightly different direction.

V4 State Handling

v4 encourages us to be more upfront about what our state looks like rather than hiding it in property bags. Start by creating a class that holds the state properties you want to access, each exposed as an IStatePropertyAccessor<T>:

```csharp
public IStatePropertyAccessor<FavouriteColour> FavouriteColourChoice { get; set; }
```
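The gain over the v3 bag is that each accessor binds one property name to one type. The stand-alone sketch below models that with a dictionary; `PropertyAccessor<T>` and `MyStateAccessors` are hypothetical simplifications of `IStatePropertyAccessor<T>` (no turn context, no cancellation token), and the `FavouriteColour` members other than `None` are invented for the example. In this sketch a missing value is created from the default factory and written back into the state.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Only None appears in the post; the other members are illustrative.
public enum FavouriteColour { None, Red, Green, Blue }

// Hypothetical, simplified stand-in for IStatePropertyAccessor<T>: one property
// name, one type, so callers never handle raw string keys or casts.
public class PropertyAccessor<T>
{
    private readonly IDictionary<string, object> _state;
    public string Name { get; }

    public PropertyAccessor(IDictionary<string, object> state, string name)
    {
        _state = state;
        Name = name;
    }

    public Task<T> GetAsync(Func<T> defaultValueFactory)
    {
        if (_state.TryGetValue(Name, out var raw) && raw is T typed)
            return Task.FromResult(typed);

        // In this sketch the default is also written back into the state.
        var value = defaultValueFactory();
        _state[Name] = value;
        return Task.FromResult(value);
    }

    public Task SetAsync(T value)
    {
        _state[Name] = value;
        return Task.CompletedTask;
    }
}

// The "class to hold the state properties", modelled with the sketch accessor.
public class MyStateAccessors
{
    public PropertyAccessor<FavouriteColour> FavouriteColourChoice { get; set; }
}

public static class Program
{
    public static async Task Main()
    {
        var userState = new Dictionary<string, object>();
        var accessors = new MyStateAccessors
        {
            FavouriteColourChoice = new PropertyAccessor<FavouriteColour>(userState, "BestColour")
        };

        var before = await accessors.FavouriteColourChoice.GetAsync(() => FavouriteColour.None);
        await accessors.FavouriteColourChoice.SetAsync(FavouriteColour.Green);
        var after = await accessors.FavouriteColourChoice.GetAsync(() => FavouriteColour.None);

        Console.WriteLine($"{before} -> {after}"); // None -> Green
    }
}
```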

Very early in the life-cycle, configure the state in Startup:

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services.AddBot<MainBotFeature>(options =>
    {
        ...

        // Provide a 'persistent' store implementation
        // (MemoryStorage lives in-process only and is lost on restart)
        IStorage dataStore = new MemoryStorage();

        // Register which of the 3 store types we want to use
        var privateState = new PrivateConversationState(dataStore);
        options.State.Add(privateState);

        var conversationState = new ConversationState(dataStore);
        options.State.Add(conversationState);

        var userState = new UserState(dataStore);
        options.State.Add(userState);

        ...
```

Now for the real v4 change. We register the specialized state we are going to expose as a single accessors object, which is then handed to the bot on each execution, or ‘Turn’:

```csharp
// Create and register state accessors.
// Accessors created here are passed into the IBot-derived class on every turn.
services.AddSingleton<MySpecialisedStateAccessors>(sp =>
{
    // get the options we've just defined from the configured services
    var options = sp.GetRequiredService<IOptions<BotFrameworkOptions>>().Value;

    ...

    // get the state types we registered
    var privateConversationState = options.State.OfType<PrivateConversationState>().First();
    var conversationState = options.State.OfType<ConversationState>().First();
    var userState = options.State.OfType<UserState>().First();

    // now expose our specialized state via a 'StateAccessor'
    var accessors = new MySpecialisedStateAccessors(
        privateConversationState,
        conversationState,
        userState)
    {
        FavouriteColourChoice = userState.CreateProperty<FavouriteColour>("BestColour"),
        MyDialogState = conversationState.CreateProperty<DialogState>("DialogState"),
        ...
    };

    return accessors;
});
```

The accessors object is then injected into the bot's constructor and used on each Turn:

```csharp
public MainBotFeature(MySpecialisedStateAccessors statePropertyAccessor)
{
    _userStateAccessors = statePropertyAccessor;
}

...

public async Task OnTurnAsync(ITurnContext turnContext,
    CancellationToken cancellationToken = default(CancellationToken))
{
    ...

    // Read the property, falling back to a default if it has never been set.
    var favouriteColour = await _userStateAccessors.FavouriteColourChoice.GetAsync(
        turnContext,
        () => FavouriteColour.None,
        cancellationToken);

    ...

    // Set the property using the accessor.
    await _userStateAccessors.FavouriteColourChoice.SetAsync(
        turnContext,
        favouriteColourChangedState,
        cancellationToken);

    // Save the changed favourite colour into the user state.
    await _userStateAccessors.UserState.SaveChangesAsync(turnContext, cancellationToken: cancellationToken);

    ...
```
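The explicit `SaveChangesAsync` call matters: as I understand v4, setting a property through an accessor only changes the state cached for the current turn, and nothing reaches the store until the state is saved. The stand-alone sketch below models that load/modify/save cycle with a dictionary; `CachedScopeState` is hypothetical and only similar in spirit to the SDK's internal caching, not a copy of it.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical model of one scope's state during a turn: load once, mutate a
// cached copy, then write back only if something changed.
public class CachedScopeState
{
    private readonly IDictionary<string, Dictionary<string, object>> _storage;
    private readonly string _key;
    private Dictionary<string, object> _cache = new Dictionary<string, object>();
    private bool _changed;

    public CachedScopeState(IDictionary<string, Dictionary<string, object>> storage, string key)
    {
        _storage = storage;
        _key = key;
    }

    // Start of a turn: work on a copy so unsaved changes never reach the store.
    public void Load()
    {
        _cache = _storage.TryGetValue(_key, out var stored)
            ? new Dictionary<string, object>(stored)
            : new Dictionary<string, object>();
        _changed = false;
    }

    public object Get(string name) => _cache.TryGetValue(name, out var v) ? v : null;

    public void Set(string name, object value)
    {
        _cache[name] = value;
        _changed = true;
    }

    // Only writes to the store when the cached copy was modified.
    public Task SaveChangesAsync()
    {
        if (_changed)
        {
            _storage[_key] = new Dictionary<string, object>(_cache);
            _changed = false;
        }
        return Task.CompletedTask;
    }
}

public static class Program
{
    public static async Task Main()
    {
        var storage = new Dictionary<string, Dictionary<string, object>>();
        var userState = new CachedScopeState(storage, "webchat/users/user-1");

        // Turn 1: set the property but forget to save.
        userState.Load();
        userState.Set("BestColour", "Green");

        // Turn 2: the change was never persisted.
        userState.Load();
        Console.WriteLine(userState.Get("BestColour") ?? "(not set)"); // (not set)

        // Still turn 2: set and save this time.
        userState.Set("BestColour", "Green");
        await userState.SaveChangesAsync();

        // Turn 3: the value survives.
        userState.Load();
        Console.WriteLine(userState.Get("BestColour")); // Green
    }
}
```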

Migrating data

If you are already running a v3 bot, migrating state data depends on how you implemented your persistent state store; the good news is that the implementation is under your control. Under the covers it’s still basically a property bag, so with a little testing you should be able to migrate without too many issues (should 😉).
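A migration mostly comes down to re-keying each v3 bag into the matching v4 scope and renaming properties to the names your accessors use. The sketch below shows only that mapping. `V3Bag`, `StateMigrator` and the `"FavouriteColour" -> "BestColour"` rename are hypothetical, and the v4 key formats are my understanding of what the v4 state classes use, so check them against your SDK version. The on-disk shape (JSON serialization, eTags, type information) depends on the storage provider and is not covered here.

```csharp
using System;
using System.Collections.Generic;

public enum V3Scope { User, Conversation, PrivateConversation }

// Hypothetical shape of one exported v3 state bag.
public record V3Bag(V3Scope Scope, string ChannelId, string UserId,
                    string ConversationId, Dictionary<string, object> Values);

public static class StateMigrator
{
    // Assumed v4 storage key formats; verify against your SDK version.
    public static string V4Key(V3Bag bag) => bag.Scope switch
    {
        V3Scope.User => $"{bag.ChannelId}/users/{bag.UserId}",
        V3Scope.Conversation => $"{bag.ChannelId}/conversations/{bag.ConversationId}",
        V3Scope.PrivateConversation =>
            $"{bag.ChannelId}/conversations/{bag.ConversationId}/users/{bag.UserId}",
        _ => throw new ArgumentOutOfRangeException(nameof(bag))
    };

    // Copies every v3 value into the v4-keyed bag, renaming properties where
    // the v4 accessor uses a different name.
    public static Dictionary<string, Dictionary<string, object>> Migrate(
        IEnumerable<V3Bag> bags, IReadOnlyDictionary<string, string> renames)
    {
        var result = new Dictionary<string, Dictionary<string, object>>();
        foreach (var bag in bags)
        {
            var key = V4Key(bag);
            if (!result.TryGetValue(key, out var target))
            {
                target = new Dictionary<string, object>();
                result[key] = target;
            }
            foreach (var pair in bag.Values)
            {
                var newName = renames.TryGetValue(pair.Key, out var renamed) ? renamed : pair.Key;
                target[newName] = pair.Value;
            }
        }
        return result;
    }
}

public static class Program
{
    public static void Main()
    {
        var bags = new[]
        {
            new V3Bag(V3Scope.User, "webchat", "user-1", "conv-1",
                new Dictionary<string, object> { ["FavouriteColour"] = "Green" }),
            new V3Bag(V3Scope.PrivateConversation, "webchat", "user-1", "conv-1",
                new Dictionary<string, object> { ["SomeBooleanState"] = true }),
        };
        var renames = new Dictionary<string, string> { ["FavouriteColour"] = "BestColour" };

        foreach (var entry in StateMigrator.Migrate(bags, renames))
            foreach (var pair in entry.Value)
                Console.WriteLine($"{entry.Key} {pair.Key}={pair.Value}");
    }
}
```

Run a migration like this against a copy of the data first; the renames table is where most of the "little testing" ends up going.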

You can also now watch:

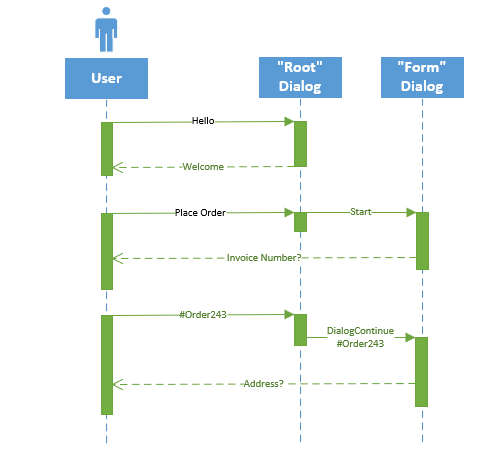

Bot Framework Dialogs and Non accessor state